Deadlock

Avoidance

The general idea behind deadlock avoidance

is to prevent deadlocks from ever happening. This requires more information

about each process, and tends to lead to low device utilization. In some

algorithms the scheduler only needs to know the maximum number

of each resource that a process might potentially use. When a scheduler sees

that starting a process or granting resource requests may lead to future

deadlocks, then that process is just not started or the request is not granted.

A resource allocation state is defined by the number of

available and allocated resources, and the maximum requirements of all

processes in the system.

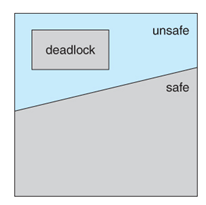

1. Safe State

A state is safe if

the system can allocate all resources requested by all processes without

entering a deadlock state. Formally, a state is safe if there exists a safe

sequence of processes { P0, P1, P2, ..., PN } such that

all of the resource requests for Pi can be granted using the resources

currently allocated to Pi and all processes Pj where j < i.

If a safe sequence does not

exist, then the system is in an unsafe state, which may lead to

deadlock. All safe states are deadlock free, but not all unsafe states lead to

deadlocks.

Example:

|

|

Maximum Needs

|

Current

Allocation

|

|

P0

|

10

|

5

|

|

P1

|

4

|

2

|

|

P2

|

9

|

2

|

Consider a system with 12 tape

drives, allocated as above. Is this a safe state? What is the safe sequence?

The required are 9 (5+2+2),

available are 3 (12-9). The system is in safe state. The

sequence<P1,P0,P2> satisfies the safety condition. P1 can be allocated

all resources and release all( available are 5) then P0 can be allocated and

release(available 10) and finally P2(12 remaining).

2. Resource-Allocation Graph algorithms

If resource categories have only

single instances of their resources, then deadlock states can be detected by

cycles in the resource-allocation graphs. Unsafe states can be recognized and

avoided by adding to the resource-allocation graph with claim edges,

noted by dashed lines, which point from a process to a resource that it may

request in the future. A claim edge Pi → Rj indicates

that a process Pi may request resource Rj in future.

When a process makes a request,

the claim edge Pi->Rj is converted to a request edge. Similarly when a

resource is released, the assignment reverts back to a claim edge. This

approach works by denying requests that would produce cycles in the

resource-allocation graph, taking claim edges into effect.

Example: Consider resource

allocation graph

What happens when process P2

requests resource R2:

The resulting resource-allocation

graph would have a cycle in it, and so the request cannot be granted.

3. Banker's

Algorithm

For resource categories that

contain more than one instance the resource-allocation graph method does not

work, and more complex methods must be chosen. Banker’s algorithm is applicable

to such system but less efficient.

The Banker's Algorithm gets its

name because it is a method that bankers could use to assure that when they

lend out resources they will still be able to satisfy all their clients.

When a process starts up, it must

state in advance the maximum allocation of resources it may request, up to the

amount available on the system. When a request is made, the scheduler

determines whether granting the request would leave the system in a safe state.

If not, then the process must wait until the request can be granted safely.

The banker's algorithm relies on

several key data structures: ( where n is the number of processes and m is the

number of resource categories. )

- Available[

m ] indicates how many resources are currently available of each type.

- Max[

n ][ m ] indicates the maximum demand of each process of each resource.

- Allocation[

n ][ m ] indicates the number of each resource category allocated to each

process.

- Need[

n ][ m ] indicates the remaining resources needed of each type for each

process. ( Note that Need[ i ][ j ] =

Max[ i ][ j ] -

Allocation[ i ][ j ] for all i, j. )

a. Safety

Algorithm

In order to apply the Banker's

algorithm, we first need an algorithm for determining whether or not a particular

state is safe. This algorithm determines if the current state of a system is

safe, according to the following steps:

- Let

Work and Finish be vectors of length m and n respectively.

- Work is a working copy of

the available resources, which will be modified during the analysis.

- Finish is a vector of

booleans indicating whether a particular process can finish. ( or has

finished so far in the analysis. )

- Initialize Work to

Available, and Finish to false for all elements.

- Find

an i such that both

(A)

Finish[ i ] == false, and

(B)

Need[ i ] < Work.

If

no such i exists, go to step 4.

- Set

Work = Work + Allocation[ i ], and set Finish[ i ] to true. This

corresponds to process i finishing up and releasing its resources back

into the work pool. Then loop back to step 2.

- If

finish[ i ] == true for all i, then the state is a safe state, because a

safe sequence has been found.

b. Resource

Request algorithm (Banker’s algorithm)

This algorithm determines if a

new request is safe, and grants it only if it is safe to do so. When a

request is made ( that does not exceed currently available resources ), pretend

it has been granted, and then see if the resulting state is a safe one.

If so, grant the request, and if

not, deny the request, as follows:

1.

Let

Request[ n ][ m ] indicate the number of resources of each type currently

requested by processes. If Request[ i ] > Need[ i ] for any process i, raise

an error condition.

2.

If

Request[ i ] > Available for any process i, then that process must wait for

resources to become available. Otherwise the process can continue to step 3.

3.

Check

to see if the request can be granted safely, by pretending it has been granted

and then seeing if the resulting state is safe. If so, grant the request, and

if not, then the process must wait until its request can be granted safely. The

procedure for granting a request ( or pretending to for testing purposes ) is:

- Available = Available -

Request

- Allocation = Allocation +

Request

- Need = Need - Request