Paging

Paging is a memory management scheme that allows a process's physical memory to be noncontiguous, and which eliminates external fragmentation by allocating memory in equal-sized blocks known as pages. Paging is the predominant memory management technique used today.

The basic idea behind paging is to divide physical memory into a number of equal-sized blocks called frames, and to divide a program's logical memory space into blocks of the same size called pages. Any page (from any process) can be placed into any available frame. The page table is used to look up the frame in which a particular page is stored.

A logical address consists of two parts: a page number (p) identifying the page in which the address resides, and an offset (d) from the beginning of that page. The page number is used as an index into a page table. The page table contains the base address of each page in physical memory. This base address is combined with the page offset to define the physical memory address that is sent to the memory unit.

The page table maps the page number to a frame number, yielding a physical address that also has two parts: the frame number and the offset within that frame. The number of bits in the frame number determines how many frames the system can address, and the number of bits in the offset determines the size of each frame.

The widths of page numbers and frame numbers, and the frame size, are determined by the architecture. Sizes are typically powers of two, which allows an address to be split into its two parts at a fixed bit position.
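The address split and the page-table lookup described above can be sketched in a few lines. This is a minimal illustration, not a model of any particular architecture: the 4 KB page size, the function names, and the contents of `page_table` are all assumptions made for the example.

```python
PAGE_SIZE = 4096                           # assumed page size: 4 KB = 2**12 bytes
OFFSET_BITS = PAGE_SIZE.bit_length() - 1   # 12 low-order bits hold the offset
OFFSET_MASK = PAGE_SIZE - 1                # mask selecting those 12 bits


def split_address(logical_address):
    """Return (page number p, offset d) for a logical address."""
    return logical_address >> OFFSET_BITS, logical_address & OFFSET_MASK


def translate(logical_address, page_table):
    """Map a logical address to a physical address through the page table."""
    p, d = split_address(logical_address)
    frame = page_table[p]                  # page number indexes the page table
    return (frame << OFFSET_BITS) | d      # frame number combined with offset


# Hypothetical page table: page 0 is in frame 5, page 1 in frame 6, and so on.
page_table = [5, 6, 1, 2]
physical = translate(0x1ABC, page_table)   # page 1, offset 0xABC
```

Because the page size is a power of two, the split needs only a shift and a mask, with no division.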

Paging has no external fragmentation, but it may have internal fragmentation, because the last frame allocated to a process is usually only partly used. In the worst case, a process needs n pages plus 1 byte: it is allocated n+1 frames, and almost an entire frame is wasted.
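The amount of internal fragmentation follows directly from rounding the process size up to a whole number of frames. The sketch below is illustrative only; the function names and the 2 KB page size used in the example are assumptions.

```python
def frames_needed(process_size, page_size):
    """Number of frames allocated: process size rounded up to whole pages."""
    return -(-process_size // page_size)   # ceiling division on integers


def internal_fragmentation(process_size, page_size):
    """Bytes allocated to the process but never used (in its last frame)."""
    return frames_needed(process_size, page_size) * page_size - process_size


PAGE_SIZE = 2048                           # assumed page size for the example

# Worst case from the text: n pages plus 1 byte, here with n = 3.
worst_case_frames = frames_needed(3 * PAGE_SIZE + 1, PAGE_SIZE)
worst_case_waste = internal_fragmentation(3 * PAGE_SIZE + 1, PAGE_SIZE)
```

In the worst case the waste is one byte short of a full page, which is one reason very large page sizes are not free.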

When a process requests memory, free frames are allocated from a free-frame list, and inserted into that process's page table.
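As a rough sketch of that bookkeeping, the free-frame list can be modeled as a queue of frame numbers and the page table as a list indexed by page number. The `allocate` function and the frame numbers are hypothetical; a real operating system tracks considerably more state per frame.

```python
from collections import deque


def allocate(free_frames, page_table, num_pages):
    """Take num_pages frames off the free list and append them to page_table."""
    if num_pages > len(free_frames):
        raise MemoryError("not enough free frames")
    for _ in range(num_pages):
        # Any free frame will do; the pages need not be contiguous in memory.
        page_table.append(free_frames.popleft())
    return page_table


# Hypothetical system state: five free frames, and a new process of four pages.
free_frames = deque([14, 13, 18, 20, 15])
new_page_table = allocate(free_frames, [], 4)
```

After the call, page 0 of the new process lives in frame 14, page 1 in frame 13, and so on, and only frame 15 remains on the free list.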

Processes are blocked from accessing anyone else's memory because all of their memory requests are mapped through their page table. There is no way for them to generate an address that maps into any other process's memory space.

The operating system must keep track of each individual process's page table, updating it whenever the process's pages get moved in and out of memory, and applying the correct page table when processing system calls for a particular process. This all increases the overhead involved when swapping processes in and out of the CPU.

Hardware Support

Page lookups must be done for every memory reference, and whenever a process gets switched in or out of the CPU, its page table must be switched in and out too, along with the rest of its register state. It is therefore appropriate to provide hardware support for this operation, in order to make both address translation and process switches as fast as possible.

The hardware implementation of the page table can be done in several ways:

One option is to use a set of registers for the page table. This is practical only when the page table is small. For example, the DEC PDP-11 uses 16-bit addressing and 8 KB pages, resulting in only 8 pages per process (2^16 / 2^13 = 8).

An alternative is to store the page table in main memory, and to use a single register (called the page-table base register, PTBR) to record where in memory the page table is located. Process switching is fast, because only the single register needs to be changed.

However, memory access is now half as fast, because every memory reference requires two memory accesses: one to fetch the frame number from the page table and another to access the desired memory location. The solution to this problem is to use a small, high-speed associative memory called the translation look-aside buffer (TLB). The benefit of the TLB is that it can search all of its entries for a key value in parallel; if the key is found anywhere in the table, the corresponding value is returned.

This kind of memory is very expensive, however, so the TLB is very small. It is therefore used as a cache that holds only some of the page-table entries.

The TLB is used with the page table in the following manner: when a logical address is generated by the CPU, its page number is presented to the TLB. If the page number is found (a TLB hit), its frame number is available immediately. If it is not there (a TLB miss), the frame number is looked up in the page table in main memory and the TLB is updated. If the TLB is full, an existing entry must be replaced; replacement strategies range from least recently used (LRU) to random. Some TLBs allow some entries to be wired down, which means that they cannot be removed from the TLB. Typically these would be entries for kernel frames.
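The hit and miss paths can be modeled in software as a small cache in front of the page table. The sketch below assumes LRU replacement and uses a hypothetical `TLB` class. A real TLB compares all entries in parallel in hardware, which a dictionary lookup only imitates, and wired-down entries are not modeled here.

```python
from collections import OrderedDict


class TLB:
    """Software model of a TLB with least-recently-used replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # page -> frame, least recently used first
        self.hits = 0
        self.misses = 0

    def lookup(self, page, page_table):
        """Return the frame for `page`, consulting the page table on a miss."""
        if page in self.entries:                   # TLB hit
            self.hits += 1
            self.entries.move_to_end(page)         # mark as most recently used
            return self.entries[page]
        self.misses += 1                           # TLB miss
        frame = page_table[page]                   # extra access to main memory
        if len(self.entries) >= self.capacity:
            self.entries.popitem(last=False)       # evict least recently used
        self.entries[page] = frame
        return frame


# Hypothetical page table and a two-entry TLB.
page_table = {0: 5, 1: 6, 2: 1}
tlb = TLB(capacity=2)
frames = [tlb.lookup(p, page_table) for p in (0, 1, 0, 2, 1)]
```

With this reference string only the third lookup hits: pages 0 and 1 miss on first use, page 2 evicts page 1 (the least recently used entry), and the final reference to page 1 misses again.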

The percentage of time that the desired information is found in the TLB is termed the hit ratio.

For example, suppose that it takes 100 nanoseconds to access main memory, and only 20 nanoseconds to search the TLB. A TLB hit then takes 120 nanoseconds in total (20 to find the frame number and another 100 to get the data), and a TLB miss takes 220 (20 to search the TLB, 100 to get the frame number from the page table, and another 100 to get the data).

So with an 80% TLB hit ratio, the average memory access time would be:

0.80 * 120 + 0.20 * 220 = 140 nanoseconds

for a 40% slowdown compared with the 100 nanoseconds of a single memory access, the extra time being spent getting the frame number.

A 98% hit rate would yield 122 nanoseconds average access time (0.98 * 120 + 0.02 * 220), for a 22% slowdown.
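The same arithmetic can be wrapped in a small function to try other hit ratios. The function names are made up for this sketch, and the defaults are the 100 ns and 20 ns figures from the example above, not measurements of any real machine.

```python
def effective_access_time(hit_ratio, memory_ns=100, tlb_ns=20):
    """Average time per memory reference with a TLB in front of the page table."""
    hit_time = tlb_ns + memory_ns          # TLB search + data access
    miss_time = tlb_ns + 2 * memory_ns     # TLB search + page table + data
    return hit_ratio * hit_time + (1 - hit_ratio) * miss_time


def slowdown_percent(hit_ratio, memory_ns=100, tlb_ns=20):
    """Slowdown relative to a single untranslated memory access, in percent."""
    eat = effective_access_time(hit_ratio, memory_ns, tlb_ns)
    return (eat - memory_ns) / memory_ns * 100


eat_80 = effective_access_time(0.80)   # about 140 ns, as computed above
eat_98 = effective_access_time(0.98)   # about 122 ns
```

Because the results are floating-point values, they may differ from 140 and 122 in the last few digits.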

Protection

Memory protection in a paged environment is accomplished by protection bits associated with each frame. A bit or bits can be added to each page-table entry to classify a page as read-write, read-only, read-write-execute, or some combination of these. Each memory reference can then be checked to ensure it is accessing the memory in the appropriate mode.

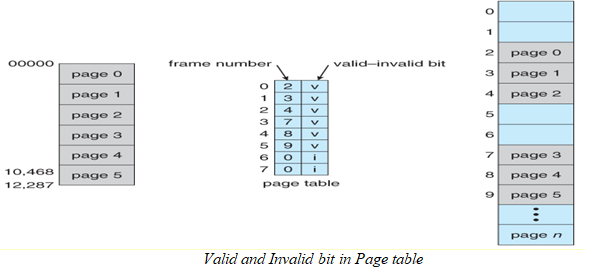

One additional bit (the valid-invalid bit) is attached to each entry in the page table. If the bit is set to valid, the associated page is in the process's logical address space. If the bit is set to invalid, the page is not in the process's logical address space, and an attempt to access it is trapped as an illegal address.

Many processes do not use all of the page table available to them, particularly in modern systems with very large potential page tables. Rather than waste memory by creating a full-size page table for every process, some systems use a page-table length register, PTLR, to specify the length of the page table.
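The three checks in this section (the page-table length, the valid-invalid bit, and the protection bits) can be sketched together. Everything named here is hypothetical: `PageTableEntry`, `MemoryFault`, and `check_access` are illustrative, and the sketch assumes that reading is always permitted on a valid page. In real hardware these checks are part of address translation and a violation causes a trap to the operating system.

```python
from dataclasses import dataclass


@dataclass
class PageTableEntry:
    frame: int
    valid: bool = True         # valid-invalid bit
    writable: bool = False     # protection bits; in this sketch a valid
    executable: bool = False   # page can always be read


class MemoryFault(Exception):
    """Stands in for the hardware trap to the operating system."""


def check_access(page_table, ptlr, page, mode):
    """Return the frame for `page` if an 'r', 'w', or 'x' access is legal."""
    if page >= ptlr:                               # beyond the page-table length
        raise MemoryFault(f"page {page} is outside the page table")
    entry = page_table[page]
    if not entry.valid:                            # not in the address space
        raise MemoryFault(f"page {page} is invalid")
    if mode == "w" and not entry.writable:
        raise MemoryFault(f"page {page} is not writable")
    if mode == "x" and not entry.executable:
        raise MemoryFault(f"page {page} is not executable")
    return entry.frame


# Hypothetical three-entry page table.
page_table = [
    PageTableEntry(frame=3, executable=True),      # page 0: code
    PageTableEntry(frame=8, writable=True),        # page 1: data
    PageTableEntry(frame=0, valid=False),          # page 2: unused
]
PTLR = len(page_table)
```

With this table, executing from page 0 or writing to page 1 succeeds, while writing to page 0, touching page 2, or referencing any page number at or beyond `PTLR` raises `MemoryFault`.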

Shared Pages

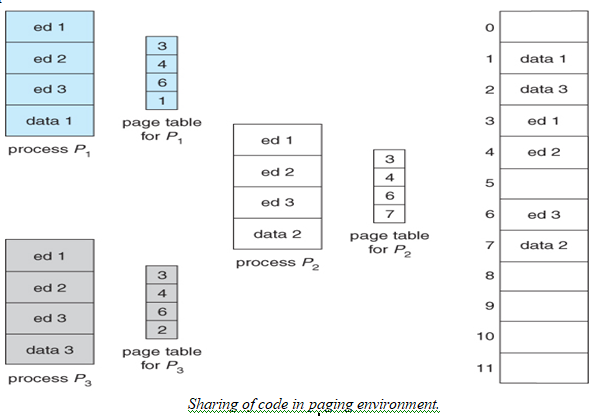

An advantage of paging is the possibility of sharing common code, which is important in a time-sharing environment. Paging systems make it very easy to share blocks of memory: the same frame numbers are simply entered in the page tables of multiple processes. This may be done with either code or data. Code can be shared by multiple processes if it is reentrant, meaning that it never writes to or changes itself in any way (it is non-self-modifying), so it is safe for several processes to execute it at the same time.
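A small sketch of the idea, using made-up frame numbers: two processes run the same reentrant program, so the code pages in both page tables point at the same frames, while each process keeps its own private data page.

```python
# Hypothetical frames holding three pages of shared, reentrant code.
SHARED_CODE_FRAMES = [3, 4, 6]

# Each page table maps pages 0-2 to the shared code and page 3 to private data.
page_table_p1 = SHARED_CODE_FRAMES + [1]   # process 1: data page in frame 1
page_table_p2 = SHARED_CODE_FRAMES + [7]   # process 2: data page in frame 7


def shared_frames(table_a, table_b):
    """Frames that appear in both page tables, i.e. physically shared memory."""
    return sorted(set(table_a) & set(table_b))


common = shared_frames(page_table_p1, page_table_p2)
```

Only one copy of the code occupies physical memory, so two processes use five frames here instead of eight.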
